This page has some notes on changes to our DNS server names and addresses around the end of 2018 / start of 2019.

The old Computing Service domains csx.cam.ac.uk and csi.cam.ac.uk are to be abolished, so the DNS servers must be renamed. The University-wide service TLD dns.cam.ac.uk has been allocated for the new names.

We have a new IPv6 prefix, so we must renumber all IPv6 service addresses. The prefix 2a05:b400:d::/48 has been allocated for DNS servers.

The data centre network is being partitioned to improve network-level security. This also entails some IPv4 renumbering.

Longer term we plan to move recursive DNS out of the server network, and make it an anycast service in the CUDN core.

Improvements

Make it clearer from their names which servers are test and which are live.

More distinct naming between normal RPZ filtered and special unfiltered recursive service.

Dual-stack service addresses for all servers. (I considered making the back-end IPv6-only, but we can't currently rebuild servers from scratch without IPv4.)

Use the same on-site authoritative server in zones that only list one. For example, at the moment cam.ac.uk uses authdns0 whereas ic.ac.uk uses authdns1, which is sub-optimal because authdns1 lacks glue in the parent zone.

Centralized zone transfer fan-in / fan-out servers, to allow for more dynamic (cloud-friendly) configuration of the other servers.

Current status, 2019-12-18

Delegation updates mostly done. The remaining ones are cam.ac.uk itself, and a number of JANET reverse DNS zones. The delegation updates were delayed waiting for our superglue delegation management scripts to be rewritten, which did not become urgent until the last quarter of 2019.

At the moment it looks like the auth/xfer split will happen before the IPv6 renumbering.

Current status, 2019-01-17

Server rename and upgrade done.

Delegation updates are the next task.

Not currently known when renumbering will happen - there are dependencies on activities in other parts of the UIS.

Splitting xfer servers from auth servers, and moving rec servers to anycast, will probably happen along with the renumbering.

Constraints

Traditional recursive service addresses cannot change.

The authoritative server addresses are difficult to change because they are wired into a lot of secondary server configurations. We can loosen this constraint by separating authoritative service (the name servers and addresses in zones) from zone transfers (the addresses in secondary server configurations).

The authoritative server addresses should (continue to) appear from outside to be on separate subnets. (In practice they are on the shared server infrastructure, but that is resilient enough for this purpose.)

We should avoid having to renumber the servers again, which probably means moving service addresses into 131.111.8.0/25 from 131.111.9.0/24, and into 131.111.12.0/25 from 131.111.12.128/25 so that the evacuated ranges can be recycled. (Ideally 131.111.8.32/28 and 131.111.12.32/28 if possible.) This mainly affects the test authoritative servers and the unfiltered recursive servers.

Where we don't know the post-renumbering IPv4 addresses they are marked ??? below.
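As a rough check on the constraint above, this Python sketch tests whether an IPv4 address falls in one of the ranges to be evacuated, and confirms that the preferred /28s sit inside the ranges we keep. The function and variable names are illustrative only; the ranges and addresses are the ones listed on this page. It says nothing about other reasons an address might change, such as the need for anycast support.

```python
import ipaddress

# Ranges named in the constraint above.
KEEP = [ipaddress.ip_network("131.111.8.0/25"),
        ipaddress.ip_network("131.111.12.0/25")]
EVACUATE = [ipaddress.ip_network("131.111.9.0/24"),
            ipaddress.ip_network("131.111.12.128/25")]
PREFERRED = [ipaddress.ip_network("131.111.8.32/28"),
             ipaddress.ip_network("131.111.12.32/28")]


def in_evacuated_range(addr: str) -> bool:
    """True if addr is in a range that is to be recycled."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in EVACUATE)


def preferred_ranges_fit() -> bool:
    """True if each preferred /28 lies inside one of the kept /25s."""
    return all(any(p.subnet_of(k) for k in KEEP) for p in PREFERRED)


if __name__ == "__main__":
    # A few of the current service addresses from this page.
    for addr in ["131.111.8.37", "131.111.9.237", "131.111.12.237", "131.111.9.99"]:
        print(addr, "must move" if in_evacuated_range(addr) else "can stay")
    print("preferred /28s fit:", preferred_ranges_fit())
```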

IPv6 subnetting

2a05:b400:d:a0::/60 - auth.dns subnets x16

2a05:b400:d:d0::/60 - rec.dns anycast subnets x16

2a05:b400:d:10::/60 - link subnets to hardware x16

2a05:b400:d:70::/60 - back-end systems on data centre subnet(s)

This could alternatively be DNS-specific subnets in the server /48, e.g. 2a05:b400:5:d0::/60
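The "x16" in the list above follows from splitting each /60 into /64s. A minimal sketch using Python's standard ipaddress module shows the arithmetic; the helper name is made up for illustration, and the prefixes are the ones allocated above.

```python
import ipaddress

DNS_PREFIX = ipaddress.ip_network("2a05:b400:d::/48")


def list_subnets(block: str, new_prefix: int = 64) -> list:
    """Return the subnets of `block` at the given prefix length, as strings."""
    network = ipaddress.ip_network(block)
    return [str(n) for n in network.subnets(new_prefix=new_prefix)]


auth_subnets = list_subnets("2a05:b400:d:a0::/60")

if __name__ == "__main__":
    print(len(auth_subnets))   # 16
    print(auth_subnets[0])     # 2a05:b400:d:a0::/64
    print(auth_subnets[1])     # 2a05:b400:d:a1::/64
    print(auth_subnets[-1])    # 2a05:b400:d:af::/64
    # Every /60 listed above lies inside the DNS /48.
    for block in ["2a05:b400:d:a0::/60", "2a05:b400:d:d0::/60",
                  "2a05:b400:d:10::/60", "2a05:b400:d:70::/60"]:
        assert ipaddress.ip_network(block).subnet_of(DNS_PREFIX)
```

So the auth1.dns address 2a05:b400:d:a1::1 is the first host address in the second /64 of the auth.dns block.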

Authoritative servers

Only one of our auth servers, authdns0, is listed in the cam.ac.uk NS records, which means the other, authdns1, lacks glue in the ac.uk zone. So it makes sense to use the same server in all zones that only use one of our authoritative servers, for faster resolution.

This mainly affects the zones that we provide secondary service for. They are going to have to change things for the renaming / renumbering, so it makes sense to switch them from authdns1.csx to auth0.dns.

authdns0.csx -> auth0.dns

131.111.8.37

2001:630:212:8::d:a0 -> 2a05:b400:d:a0::1

authdns1.csx -> auth1.dns

131.111.12.37

2001:630:212:12::d:a1 -> 2a05:b400:d:a1::1

I considered changing the instance numerals on these servers to indicate which test server is on the same subnets as which live server, but I decided that it was more convenient to keep the numerals distinct so that the -test suffix can be (optionally) omitted.

authdns2.csx -> auth2-test.dns

131.111.9.237 -> ???

2001:630:212:8::d:a2 -> 2a05:b400:d:a0::2

authdns3.csx -> auth3-test.dns

131.111.12.237 -> ???

2001:630:212:12::d:a3 -> 2a05:b400:d:a1::2

Recursive service addresses

There are four sets of service addresses, two live, two test. Each has two views, standard (with RPZ) and raw (no RPZ). Each has two IPv4 /32s (one for each view) and an IPv6 /64 (covering both views).

The numerals indicate that corresponding rec and raw services are views in the same set of service addresses. The IPv6 subnets match similarly. In v6, b is for "with RPZ blocks" and c is for "clear".

The test servers have different numerals to indicate that they are different sets of service addresses and different IPv6 subnets. (Previously their numerals indicated subnet 8 vs subnet 12, and rec vs raw, but we are moving away from that meaning.) There's no particular need for stability of test server IP addresses, though the IPv4 addresses need to be in ranges that support anycast.

This scheme can stay the same before and after the switch to anycast.
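The naming and IPv6 numbering scheme described above is regular enough to be generated. The following Python sketch is one way to express it, assuming sets 0 and 1 are live and sets 2 and 3 are test, as listed below; the function name and constants are hypothetical and not part of any existing tooling. IPv4 addresses are left out because most of them are not yet known.

```python
import ipaddress

# rec.dns anycast block from the IPv6 subnetting section.
REC_BLOCK = ipaddress.ip_network("2a05:b400:d:d0::/60")
# Host part per view: b = "with RPZ blocks", c = "clear".
VIEW_SUFFIX = {"rec": 0xB, "raw": 0xC}
# Assumption taken from this page: sets 2 and 3 are the test sets.
TEST_SETS = {2, 3}


def recursive_service(set_no: int, view: str) -> tuple:
    """Return (new name, new IPv6 address) for one view of one set."""
    if view not in VIEW_SUFFIX:
        raise ValueError("view must be 'rec' or 'raw'")
    if set_no not in range(4):
        raise ValueError("set number must be 0..3")
    # Each set of service addresses gets its own /64 within the block.
    subnet = list(REC_BLOCK.subnets(new_prefix=64))[set_no]
    address = subnet.network_address + VIEW_SUFFIX[view]
    suffix = "-test" if set_no in TEST_SETS else ""
    return f"{view}{set_no}{suffix}.dns", str(address)


if __name__ == "__main__":
    for n in range(4):
        for view in ("rec", "raw"):
            print(*recursive_service(n, view))
```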

Aliases

rec.dns == rec0.dns + rec1.dns

raw.dns == raw0.dns + raw1.dns

0 - Live

recdns0.csx -> rec0.dns

131.111.8.42

2001:630:212:8::d:0 -> 2a05:b400:d:d0::b

recdns2.csx -> raw0.dns

131.111.9.99 -> ???

2001:630:212:8::d:2 -> 2a05:b400:d:d0::c

1 - Live

recdns1.csx -> rec1.dns

131.111.12.20

2001:630:212:12::d:1 -> 2a05:b400:d:d1::b

recdns3.csx -> raw1.dns

131.111.12.99 -> ???

2001:630:212:12::d:3 -> 2a05:b400:d:d1::c

2 - Test

testdns0.csi -> rec2-test.dns

131.111.8.119 -> ???

2001:630:212:8::d:fff0 -> 2a05:b400:d:d2::b

testdns2.csi -> raw2-test.dns

131.111.9.118 -> ???

2001:630:212:8::d:fff2 -> 2a05:b400:d:d2::c

3 - Test

testdns1.csi -> rec3-test.dns

131.111.12.119 -> ???

2001:630:212:12::d:fff1 -> 2a05:b400:d:d3::b

testdns3.csi -> raw3-test.dns

131.111.12.118 -> ???

2001:630:212:12::d:fff3 -> 2a05:b400:d:d3::c

Recursive server hardware

At the moment each recursive server is multihomed on both VLANs (and corresponding IPv4 and IPv6 subnets) in which the service addresses are allocated. The addresses will remain the same until we switch to anycast.

The important feature of these is that they are physical tin in particular locations, so the rec in their names seems a little bit redundant.

Two of the servers are to be moved, at which point they will be renamed again, with a -b suffix so there will be -a and -b servers in the same location.

- recdns?-cnh.csi -> cnh-a{8,12}.dns

- recdns?-rnb.csi -> rnb-a{8,12}.dns

- recdns?-sby.csi -> sby-a{8,12}.dns

- recdns?-wcdc.csi -> wcdc-a{8,12}.dns

For the anycast setup, there will be (new?) servers in different locations (hence different names). I expect I will use a different suffix (other than -a or -b) to indicate they are anycast rather than keepalived. They will have IPv6 addresses in 2a05:b400:d:10::/60 subnetted per location. IPv4 addresses TBD.

New zone transfer servers

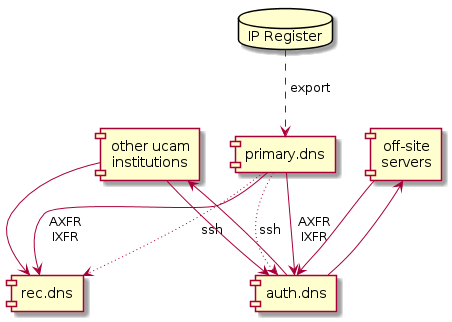

In the current setup, the arrangements for zone transfers between servers are relatively complicated.

Our auth servers act as a zone transfer relay for the zones run by the UIS, so that other servers are not transferring directly from the primary server. The primary server is relatively privileged, so we do not want non-UIS systems to be able to access it at all.

The auth servers transfer our zones to our off-site secondaries, and receive zones from off-site servers for which we provide secondary service. There is a similar bidirectional arrangement for on-site DNS servers at other University institutions.

The recursive servers act as a stealth secondary for our zones and for most other University zones.

Problems

The test auth servers are not able to secondary all the zones that the live servers do, because they are not listed in the necessary ACLs. This means they always have a slightly broken configuration, which isn't ideal for a test server.

The recursive servers transfer our zones directly from our primary server, so they are not configured in the same way as we recommend for other stealth secondaries.

Our recommended stealth secondary configuration suggests transferring zones from four different places, which is more complicated than it could be.

The automatically configured zones for our auth and rec servers are a bit too complicated to fit comfortably into the "catalog zone" model, so they are reconfigured via ssh. If we can simplify their configuration we can simplify the tricky ssh permissions and ACLs.

Commercial RPZ providers typically have a strict limit on the number of servers that may transfer their zones. We need to run a fan-out server for their zones, and we don't currently have a good option.

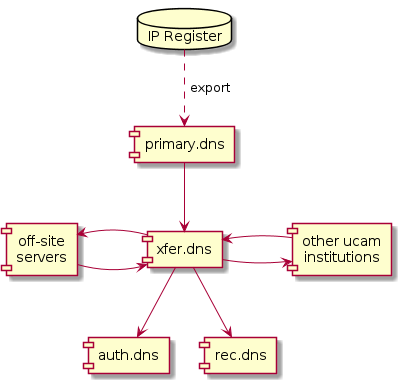

Future

The new plan looks something like this:

The new central xfer servers will provide only zone transfers.

They will send NOTIFY messages eagerly to any recent xfer clients, rather than having a fixed NOTIFY recipient list.

The xfer servers will be listed in ACLs for off-site secondaries, RPZ providers, etc.

The external dependencies for the auth servers will be reduced to their names (in zones) and glue addresses for the cam.ac.uk zone.

The new setup will allow (test) instances of auth and rec servers to be created and destroyed without reconfiguring the rest of the system.

We'll simplify the recommended stealth secondary configuration to transfer all zones from the xfer service.
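To illustrate the idea of sending NOTIFY to recent xfer clients instead of a fixed recipient list, here is a minimal Python sketch of the bookkeeping involved. It is not how the xfer servers are implemented: the class name, the per-zone tracking, and the retention window are all assumptions made for the example, and this page does not define how "recent" is measured.

```python
import time
from collections import defaultdict


class RecentXferClients:
    """Remember which clients recently transferred each zone."""

    def __init__(self, max_age_seconds=7 * 24 * 3600, clock=time.monotonic):
        # max_age_seconds is a made-up default, not a documented setting.
        self.max_age = max_age_seconds
        self.clock = clock
        self.last_seen = defaultdict(dict)  # zone -> {client address: time}

    def record_transfer(self, zone, client):
        """Call whenever a client completes a zone transfer."""
        self.last_seen[zone][client] = self.clock()

    def notify_targets(self, zone):
        """Clients to NOTIFY when the zone changes; expired ones are dropped."""
        now = self.clock()
        clients = self.last_seen.get(zone, {})
        expired = [c for c, seen in clients.items() if now - seen > self.max_age]
        for client in expired:
            del clients[client]
        return sorted(clients)


if __name__ == "__main__":
    tracker = RecentXferClients()
    # 192.0.2.53 is a documentation address standing in for a secondary.
    tracker.record_transfer("cam.ac.uk", "192.0.2.53")
    print(tracker.notify_targets("cam.ac.uk"))
```

With this kind of tracking, a new (test) auth or rec instance starts receiving NOTIFY messages after its first transfer, and a destroyed instance drops off the list once the window expires, without anyone editing a recipient list.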

DHCP servers

These need IPv4 service addresses (currently RFC 1918); they will need IPv6 addresses so that they are reachable from the admin server.

dhcp[01].csi.private -> dhcp[01].dns

dhcp2.csi.private -> dhcp2-test.dns

New service names (vboxes hosted on public servers):

- dhcp[01].dns.private

- dhcp2-test.dns.private

Back-end servers

These will have IPv6 addresses allocated from 2a05:b400:d:70::/60 subnetted according to the network security policy.

DNS "hidden" primary / admin server

- ipreg.csi -> pri0.dns

- gerpi.csi -> pri1-test.dns

database hosts

For moving off Jackdaw onto PostgreSQL; details TBD.

- db0.dns

- db1.dns

- db3.dns (maybe?)

- db-q.dns (maybe?)

Duplicated for -test servers

web hosts

For documentation, stateless stuff

- web0.dns

- web1-test.dns

Web front-ends

These will either be floating dual-stack service addresses hosted on the back-end, or the Traffic Managers will provide the front-end addresses.

database UI service names

CNAMEs pointing at a suitable web server, either at web[01] or at a dedicated database front-end server.

- v3.dns (for existing UI ported off jackdaw)

- v4.dns (new UI)

Also -test versions for user accessible test endpoints

other web

- www.dns -> web0.dns

RPZ target

This has special firewall requirements (returning ICMP administratively prohibited errors except for port 80). It is implemented as IP-based virtual hosts on web[01].

- block.dns

- block-test.dns

Hosted services

Redirector service for cambridge.ac.uk etc. is now on the Traffic Managers.

- ucam.ac.uk -> redirect.admin

Managed Zone Service to stay where it is for now, hosted on the MWS.

- mzs.dns -> mws-NNNNN.mws3.csx